I’ve tried to avoid writing about generative AI—with some success—but it’s more or less impossible not to read about it. I make no claim to comprehensiveness, but there are several essays I’ve found interesting, clever, or thought-provoking.

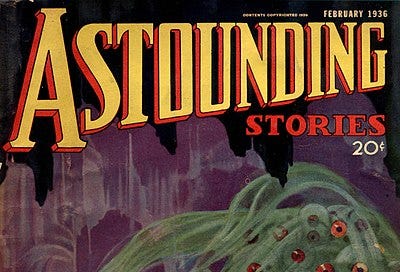

Two were written by Henry Farrell, a political scientist at Johns Hopkins, the first in collaboration with the CMU statistician Cosma Shalizi. That first essay explores the trope of the Large Language Model (LLM) as shoggoth, which naturally tempts the Lovecraft fan in me, but it looks back to the Industrial Revolution:

That was when we saw the first “vast, inhuman distributed systems of information processing” which had no human-like “agenda” or “purpose,” but instead “an implacable drive ... to expand, to entrain more and more of the world within their spheres.” Those systems were the “self-regulating market” and “bureaucracy.”

LLMs are not the first shoggoths, then, nor are they likely to rise up and take over:

Instead, they are another vast inhuman engine of i…
